- Create the bare-bones resource

- Assign attributes / users / templates

- Commit the version (ready for use)

- The user id is fetched from the configuration object.

- The action doActionProjectVersionOfAuthEntity is passed a type="assign" action with an array of version ids.

- Retrieves all custom tags for the application version

- Retrieves a custom tag based on its ID and appends it to the custom tag list

- Updates the custom tags list for the application version

- Create the local user.

- Assign to the version id defined in the config file.

- Get the currently assigned auth entities for version config.sampleSecondaryVersionId and save the result.

- Assign new auth entities from config.sampleAuthEntities to config.sampleSecondaryVersionId.

- Revert the version to its original state by re-assigning the entities saved from the response in step 1.

- Creates a new attribute definition of type single input (text)

- Splits the versions into batches and fires the attribute value assignment requests of each batch in parallel. Once responses are returned for all versions in a batch, the next batch is sent out.

- Generate a download file token.

- Pass the token to the download endpoint in a query param and perform a standard HTTP file download.

- Generate a file token.

- Pass the token to the upload endpoint in a query param along with a multipart form upload request.

- Schedule a report for processing

- Track the report until processing is complete

- Download the report.

- Find the report definition id based on name.

- Generate a DISA STIG report.

- Call to obtain a token using basic HTTP auth.

a. In the sample code, a “UnifiedLoginToken” is generated for use with subsequent calls. It is generated with a short lifetime of 0.5 days, which is sufficient for the small set of operations. The system automatically deletes the token some time after it expires. This approach may be suitable for one-off scripts.

b. Alternatively, if the script is used for daily automation, the username/password may not be available for each run. In that case, a long-lived token (lifetime of several months or more), such as “AnalysisUploadToken” or “AuditToken”, should be generated offline using the REST API or the legacy “fortifyclient” tool. This token must be persisted and is reused by each daily run of the automation.

- Make all subsequent calls to SSC passing that token.

- Clean up any tokens that are not needed for the next run of the automation, and always reuse long-lived tokens.
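The token lifetime in step a is expressed as a terminal (expiration) date: generateToken passes `getExpirationDateString()` as `terminalDate`, but that helper's body is not shown in this document. The following is a minimal sketch of what such a helper might look like, assuming SSC accepts an ISO-8601 timestamp for `terminalDate`; the `lifetimeDays` and `now` parameters are hypothetical.

```javascript
/**
 * Hypothetical sketch of a getExpirationDateString-style helper.
 * Assumption: SSC accepts an ISO-8601 timestamp for terminalDate.
 * @param {number} lifetimeDays - e.g. 0.5 for a short-lived UnifiedLoginToken
 * @param {Date} [now] - injectable clock, defaults to the current time
 */
function getExpirationDateString(lifetimeDays = 0.5, now = new Date()) {
  const MS_PER_DAY = 24 * 60 * 60 * 1000;
  const expiresAt = new Date(now.getTime() + lifetimeDays * MS_PER_DAY);
  return expiresAt.toISOString();
}

// A one-off script might use half a day; a token for daily automation would
// instead be generated offline with a much longer lifetime.
console.log(getExpirationDateString(0.5));
```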

What’s in the box?

Mocha

Mocha is a testing framework that runs test suites made up of test cases. Typically, each [component]_spec.js file is one test suite that tests that component. This project consists of a few spec files that perform different tasks on SSC through the REST API.

Swagger

Swagger generates client code from a spec file. SSC hosts a spec file generated from the API calls it exposes.

Swagger-js

This project uses swagger-js, the JavaScript implementation of code generation from the spec file. See Swagger for more detail. We use version 2.x rather than 3.x, because that is the version SSC exposes.

...

//restClient.js

new Swagger({

url: `${config.sscAPIBase}/spec.json`, //this loads the spec file from SSC

usePromise: true,

}).then((api) => {

console.log("successfully loaded swagger spec. Attempting to call heartbeat /features ");

that.api = api;

async.waterfall([

function getToken(callback) {

...

Prerequisites

Node + NPM > 6.11

Installation

npm install

or

yarn install

Usage

Environment Variables

The configuration parameters these samples need, including SSC URLs and credentials, must be set as environment variables.

For convenience, the dotenv Node module is used.

In the root of the project, create a file named .env.

Edit the .env file and define the environment variables. Node picks them up and makes them available to the running sample.

//.env

# no trailing slash, e.g., http://localhost:8080/ssc

SSC_URL=http[s]://[host]:[port]/[ssc_context]

SSC_USERNAME=changeme

SSC_PASSWORD=changeme

#DisableSSLSecurity=true

You can also simply define these environment variables in the shell that is launching the app.
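Whichever way the variables are set, the samples end up reading them from `process.env`. The following minimal sketch shows one way to turn them into a config object and fail early when something is missing. `buildConfigFromEnv` is a hypothetical helper; `sscAPIBase`, `user`, and `password` mirror names used elsewhere in this document, and deriving `sscAPIBase` as `[ssc_context]/api/v1` is an assumption based on the attributeDefinitions URL shown later.

```javascript
/**
 * Sketch: turn environment variables (from .env via dotenv, or the shell)
 * into a config object. Assumption: the REST API lives under
 * [ssc_context]/api/v1.
 */
function buildConfigFromEnv(env) {
  const required = ["SSC_URL", "SSC_USERNAME", "SSC_PASSWORD"];
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error("Missing environment variables: " + missing.join(", "));
  }
  // The .env comment asks for no trailing slash; strip any, to be safe.
  const sscURL = env.SSC_URL.replace(/\/+$/, "");
  return {
    sscURL,
    sscAPIBase: sscURL + "/api/v1",
    user: env.SSC_USERNAME,
    password: env.SSC_PASSWORD,
    disableSSLSecurity: env.DisableSSLSecurity === "true",
  };
}

// In the samples, require("dotenv").config() would run first to load .env.
console.log(buildConfigFromEnv({
  SSC_URL: "http://localhost:8080/ssc/",
  SSC_USERNAME: "changeme",
  SSC_PASSWORD: "changeme",
}).sscAPIBase);
```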

config.js

Edit the config.js file and set the parameters.

{

versionIdCSV: "aa-version-sample.csv", //filename with comma-separated application version ids

batchSize: 2, //REST call parallel batch size: how many versions to call in parallel in one batch (batches are executed sequentially)

sampleFPR: '/fpr/Logistics_1.3_2013-01-17.fpr',

sampleVersionId: 2,

downloadFolder: "/downloads" //relative to the project root; will be created if it does not exist

}
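The batch scripts read their version ids from the file named in `versionIdCSV`. The following sketch shows one way to parse such a file, assuming ids are separated by commas and/or newlines; `parseVersionIds` and `readVersionIds` are hypothetical helpers, not functions from the sample project.

```javascript
const fs = require("fs");

/**
 * Sketch: parse the contents of the versionIdCSV file into numeric version ids.
 * Assumption: ids are separated by commas and/or newlines; blank fields are ignored.
 */
function parseVersionIds(csvText) {
  return csvText
    .split(/[,\r\n]+/)
    .map((field) => field.trim())
    .filter((field) => field.length > 0)
    .map((field) => {
      const id = Number(field);
      if (!Number.isInteger(id) || id <= 0) {
        throw new Error(`Invalid version id in CSV: "${field}"`);
      }
      return id;
    });
}

// Reads the file synchronously, e.g. readVersionIds(config.versionIdCSV).
function readVersionIds(csvPath) {
  return parseVersionIds(fs.readFileSync(csvPath, "utf8"));
}
```

Rejecting non-numeric fields up front means a typo in the CSV fails before any REST calls are made.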

Run

To see the full list of scripts, view the package.json file and look in the scripts property.

"scripts": {

"predict": "mocha --compilers js:babel-core/register test/auditAssistantBatchPredict_spec.js",

"train": "mocha --compilers js:babel-core/register test/auditAssistantBatchTraining_spec.js",

"createVersion": "mocha --compilers js:babel-core/register test/createVersion_spec.js"

...

},

SSL

You might encounter an UNABLE_TO_VERIFY_LEAF_SIGNATURE error when running this tool against an SSC deployment that uses SSL. To bypass the security checks in this tool, set an environment variable called DisableSSLSecurity, and then run the script. For example, in a Windows command window:

set DisableSSLSecurity=true

npm run createVersion
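How the samples act on DisableSSLSecurity is not shown here. One common way for a Node tool to implement such a switch is to set the standard Node.js `NODE_TLS_REJECT_UNAUTHORIZED` setting to `"0"`, which disables certificate verification for the whole process. The sketch below assumes that approach; `applySslSetting` is a hypothetical helper. Use it only against test servers you trust.

```javascript
/**
 * Sketch: honor a DisableSSLSecurity flag by turning off TLS certificate
 * verification for the whole Node process. Assumption: this approximates
 * what the samples do; NODE_TLS_REJECT_UNAUTHORIZED is a standard Node.js setting.
 * @param {object} env - usually process.env
 * @returns {boolean} whether verification was disabled
 */
function applySslSetting(env) {
  const disabled = String(env.DisableSSLSecurity).toLowerCase() === "true";
  if (disabled) {
    // "0" makes Node accept self-signed or otherwise unverifiable certificates.
    env.NODE_TLS_REJECT_UNAUTHORIZED = "0";
  }
  return disabled;
}

// Call early, before any HTTPS request is made: applySslSetting(process.env);
```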

Using Swagger to Make a Call to SSC

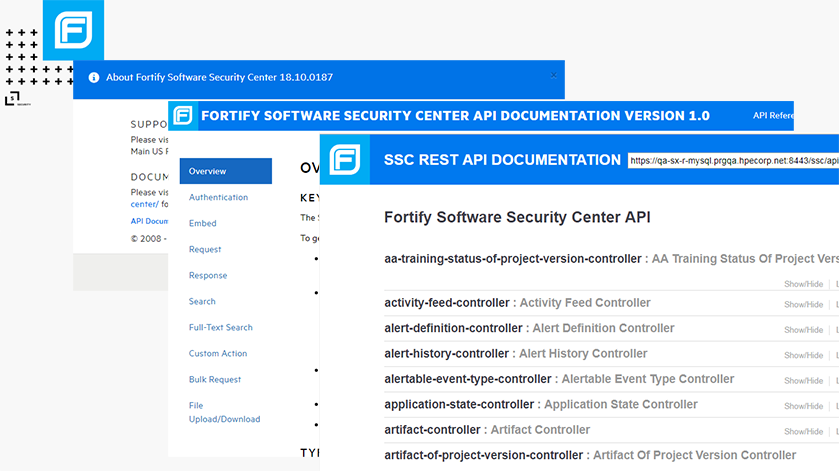

Step 1 - Identifying the controller

Use the Swagger UI that is integrated with SSC to view the list of controllers. See the image above. This example shows how to make a call to send a version to Audit Assistant for training. The same steps apply to any RESTful action.

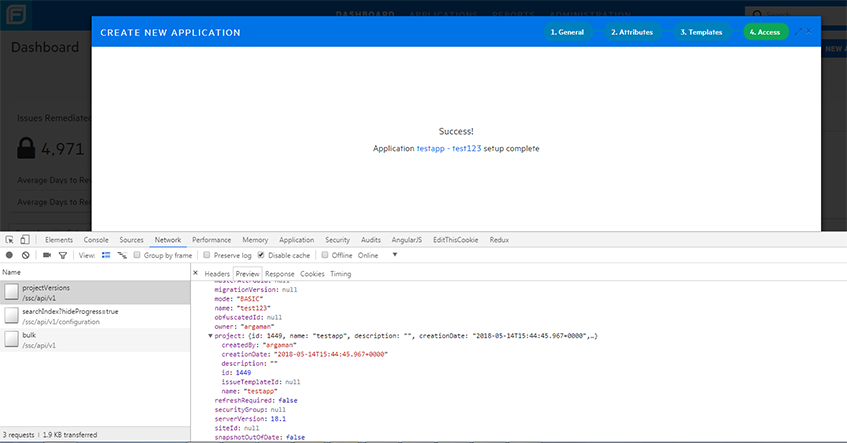

Note: An easy way to figure out which controller or set of API calls is needed to complete an action is to look at what the UI does. For example, to create a version that is considered “committed” (ready for use), two calls are needed. The UI uses a utility API called the Bulk API. Using Chrome DevTools, you can see exactly what is being sent, which lets you identify the controllers in use and the Swagger code you need.

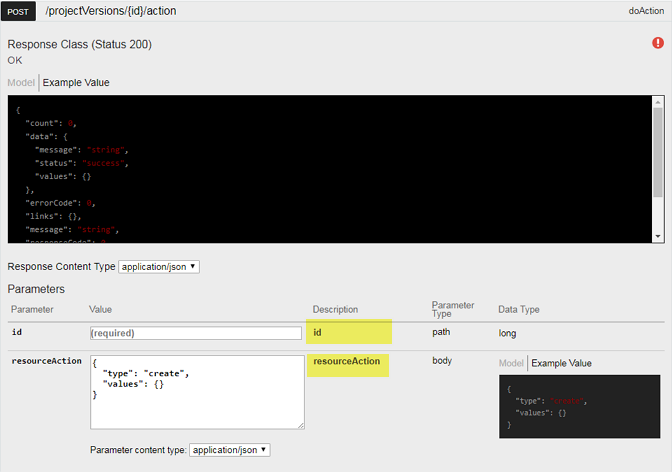

Step 2 - Using Swagger UI to construct a request

See this image for the Action API that can be performed on an application version (http://…resource/action). The parameters that this API accepts are highlighted.

Now for the code:

//restClient.js

/**

* send for prediction or training or future actions when implemented

* @param {*} versionId

* @param {*} actionType //e.g. SEND_FOR_TRAINING

*/

sendToAA(versionId, actionType) {

...

restClient.api["project-version-controller"].doActionProjectVersion({

"id": versionId, resourceAction: { "type": actionType }

}, ...).then((resp) => {

...

});

}

You will see that the id and resourceAction match the names of the fields highlighted in the image above.

We are constantly working on improving the Swagger-generated documentation to accurately match the field names, required nested fields, and so on. If information is missing, see the previous note about using Chrome DevTools to track UI calls.

Handling the result

Two options are available for handling results: promises or callbacks. This example uses promises. Promises are not used by default; here is where they are enabled:

new Swagger({

...

usePromise: true,

}).then((api) => {

...

When using promises, the pattern used is:

controller.apiCall(..).then(successCallback).catch(errorCallback)
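The samples mix this promise pattern with Node-style callbacks: the waterfall steps call `callback` from inside `.then(...)` and `.catch(...)`. One subtlety is that if the callback throws inside `.then`, the `.catch` handler runs and calls the callback a second time. The following sketch of a small adapter avoids that; `promiseToCallback` is a hypothetical helper, not part of swagger-js.

```javascript
/**
 * Sketch: bridge a promise-returning Swagger call into a Node-style callback.
 * then(onFulfilled, onRejected) runs exactly one handler, and process.nextTick
 * moves the callback out of the promise chain so an exception thrown inside it
 * surfaces normally instead of triggering the error path a second time.
 */
function promiseToCallback(promise, callback) {
  promise.then(
    (value) => process.nextTick(() => callback(null, value)),
    (err) => process.nextTick(() => callback(err))
  );
}

// Usage with any promise, e.g. controller.apiCall(...):
promiseToCallback(Promise.resolve({ obj: { data: [] } }), (err, resp) => {
  if (err) return console.error(err);
  console.log("items:", resp.obj.data.length);
});
```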

Overview

Creating a version in SSC is a three-part process.

An optional fourth step, which copies application state from an existing version, is added if options.copyCurrentState is set to true.

async.waterfall is not covered here. To learn more about it, see the Authentication section, which explains it in more detail.

The following code blocks show the bare minimum required to create a working application version in SSC. There are many assignable parameters, such as bug tracker integrations, custom tags, and so on.
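The steps in this section are chained with async.waterfall: each function receives the previous step's results plus a callback, and an error skips straight to the final callback. The following minimal re-implementation illustrates that contract only; it is not the async library's code, and the stand-in steps and version object are hypothetical.

```javascript
/**
 * Minimal illustration of the waterfall contract used to chain
 * create -> assign attributes -> commit (-> copy state).
 * Not the async library's implementation.
 */
function waterfall(tasks, done) {
  let index = 0;
  function next(err, ...results) {
    // Stop on the first error, or after the last task has finished.
    if (err || index === tasks.length) {
      return done(err || null, ...results);
    }
    const task = tasks[index++];
    task(...results, next);
  }
  next(null);
}

// Hypothetical stand-ins for the real REST calls:
waterfall([
  (callback) => callback(null, { id: 101, committed: false }),                       // create version
  (version, callback) => callback(null, version, ["attr"]),                          // assign attributes
  (version, attrs, callback) => callback(null, Object.assign({}, version, { committed: true })), // commit
], (err, version) => {
  if (err) return console.error("failed:", err);
  console.log("committed version", version.id, version.committed);
});
```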

Creating the version resource

At a minimum, a version must include some basic naming info and the application name (application is referred to as “project” in legacy releases). The application is a logical container for versions to be used for reporting / filtering / grouping, and so on (for example, Application=Office, version=95, version=2017).

//restClient.js

restClient.api["project-version-controller"].createProjectVersion({

resource: {

"name": options.name,

"description": options.description,

"active": true,

"committed": false,

"project": {

"name": options.appName,

"description": options.appDesc,

"issueTemplateId": options.issueTemplateId

},

"issueTemplateId": options.issueTemplateId

}

Assigning attributes

Each version has a default set of attributes assigned to it. Some of these are mandatory to commit the version to SSC.

To find out which attributes your SSC instance has set up, log in to SSC, go to the Administration view, and then look under Templates.

In this case, options.attributes is passed as the data parameter.

//restClient.js

function assignAttributes(version, callback) {

restClient.api["attribute-of-project-version-controller"].updateCollectionAttributeOfProjectVersion({

parentId: version.id,

data: options.attributes

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

callback(null, version, resp.obj.data);

}).catch((err) => {

callback(err);

});

},

If you look at createVersion_spec.js you will see that the following is passed:

//createVersion_spec.js

{

...

attributes: [

{ attributeDefinitionId: 5, values: [{ guid: "New" }], value: null },

{ attributeDefinitionId: 6, values: [{ guid: "Internal" }], value: null },

{ attributeDefinitionId: 7, values: [{ guid: "internalnetwork" }], value: null },

{ attributeDefinitionId: 1, values: [{ guid: "High" }], value: null }

]

}
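Each entry in that array has the same shape, so it can be built with a small helper. The following sketch reproduces the entries from createVersion_spec.js; `listAttribute` is a hypothetical name, and the ids and GUIDs must match the attribute definitions configured on your SSC instance.

```javascript
/**
 * Sketch: build one entry of the attributes array passed as `data` to
 * updateCollectionAttributeOfProjectVersion, in the shape used by
 * createVersion_spec.js. In that sample, list-type attributes carry their
 * selections in `values` and leave `value` null.
 */
function listAttribute(attributeDefinitionId, ...guids) {
  return {
    attributeDefinitionId,
    values: guids.map((guid) => ({ guid })),
    value: null,
  };
}

const attributes = [
  listAttribute(5, "New"),
  listAttribute(6, "Internal"),
  listAttribute(7, "internalnetwork"),
  listAttribute(1, "High"),
];
console.log(JSON.stringify(attributes[0]));
```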

Committing the version

Once the required attributes are set on the newly-created resource, you can commit the version.

Uncommitted versions are not usable in the UI. For example, artifacts cannot be uploaded.

//restClient.js

function commit(version, attrs, callback) {

restClient.api["project-version-controller"].updateProjectVersion({

id: version.id,

resource: { "committed": true }

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

callback(null, resp.obj.data);

}).catch((err) => {

callback(err);

});

}

Copy current state (optional)

If options.copyCurrentState is set to true, one additional call is added to the waterfall queue.

This call tells the server to copy application state (issues) from an existing version (in this case config.sampleVersionId).

Notice the previousProjectVersionId: config.sampleVersionId which is passed to the action command.

//restClient.js

//if copyCurrentState is passed, then add a function to copy state

//this will activate a background process on the server to copy over issues.

if (options.copyCurrentState === true) {

waterfallFunctionArray.push(function (version, callback) {

restClient.api["project-version-controller"].doActionProjectVersion({

"id": version.id, resourceAction: {

"type": "COPY_CURRENT_STATE",

values: { projectVersionId: version.id, previousProjectVersionId: config.sampleVersionId, copyCurrentStateFpr: true }

}

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

if (resp.obj.data.message.indexOf("failed") !== -1) {

callback(new Error(resp.obj.data.message))

} else {

callback(null, version);

}

}).catch((error) => {

callback(error);

});

});

}
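The action parameters and the response check in the step above can be separated into small helpers, which makes them easy to test without a server. In this sketch the helper names are hypothetical and the field names come from the sample; detecting failure by the word "failed" mirrors the sample's legacy check and is a heuristic, not a documented contract.

```javascript
/**
 * Sketch: build the COPY_CURRENT_STATE parameters for doActionProjectVersion.
 */
function buildCopyStateParams(newVersionId, previousVersionId) {
  return {
    id: newVersionId,
    resourceAction: {
      type: "COPY_CURRENT_STATE",
      values: {
        projectVersionId: newVersionId,
        previousProjectVersionId: previousVersionId,
        copyCurrentStateFpr: true,
      },
    },
  };
}

/**
 * Sketch: the sample's legacy failure check, tolerant of missing fields.
 */
function actionFailed(resp) {
  const message = resp && resp.obj && resp.obj.data && resp.obj.data.message;
  return typeof message === "string" && message.indexOf("failed") !== -1;
}
```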

Overview

Assign a user based on user id to multiple versions in a single API call.

//assignUserToVersions_spec.js

const userId = config.sampleUserId;

const requestData = {"type":"assign","ids":[config.sampleVersionId, config.sampleSecondaryVersionId]};

restClient.assignUserToVersions(userId,requestData).then((resp) => {

console.log(chalk.green(`successfully assigned userid ${userId} to versions [${config.sampleVersionId} , ${config.sampleSecondaryVersionId}]`));

...

//restClient.js

restClient.api["project-version-of-auth-entity-controller"].doActionProjectVersionOfAuthEntity({

parentId: userId,

collectionAction: requestData

...
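The `collectionAction` body is just a type plus an array of version ids. The following sketch builds it with duplicates removed and ids validated before any request is made; `buildAssignAction` is a hypothetical helper.

```javascript
/**
 * Sketch: build the collectionAction body for
 * doActionProjectVersionOfAuthEntity. Duplicate ids are removed and each id
 * is validated before any request is made.
 */
function buildAssignAction(versionIds) {
  const ids = Array.from(new Set(versionIds));
  ids.forEach((id) => {
    if (!Number.isInteger(id) || id <= 0) {
      throw new Error(`Invalid version id: ${id}`);
    }
  });
  return { type: "assign", ids };
}

// Same shape as requestData in assignUserToVersions_spec.js:
console.log(JSON.stringify(buildAssignAction([2, 3, 2])));
```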

Overview

The test case described in this article does the following:

The following sections describe how to perform these steps.

Obtaining all Custom Tags for an Application Version

To obtain a list of all custom tags associated with an application version, run the following:

//updateCustomTagsOfVersion_spec.js

...

let customTags = [];

it('get all the custom tags for a project', function (done) {

restClient.getAllCustomTagsOfVersion(config.sampleVersionId).then((response) => {

customTags = response;

console.log(chalk.green(`successfully got all the custom tags for the project version with ID ${config.sampleVersionId}`));

done();

}).catch((err) => {

console.log(chalk.red("error getting all the custom tags for the project version with ID:" + config.sampleVersionId), err)

done(err);

});

});

...

//restClient.js

...

getAllCustomTagsOfVersion(versionId) {

const controller = this.api["custom-tag-of-project-version-controller"];

return controller.listCustomTagOfProjectVersion({ 'parentId': versionId }, getClientAuthTokenObj(this.token)).then((response) => {

return response.obj.data;

});

}

...

The variable customTags stores the custom tags.

Obtaining a Custom Tag and Adding it to the Custom Tag List

Next, you obtain the custom tag object based on its ID. Once you have the complete object, you can append it to the application version’s custom tags list. The variable customTags stores the custom tags list with the new custom tag object appended.

To obtain the custom tag object based on its ID:

//updateCustomTagsOfVersion_spec.js

...

it('get the specific custom tag that we want to add and append to final list', function (done) {

restClient.getCustomTag(config.sampleCustomTagId).then((response) => {

customTags.push(response.obj.data);

console.log(chalk.green(`IDs of custom tag list with new appended element: ` + customTags.map(elem => elem.id).join(", ")));

done();

}).catch((err) => {

console.log(chalk.red("error appending the selected custom tag to the list"), err)

done(err);

});

});

...

//restClient.js

...

getCustomTag(customTagId) {

const controller = this.api["custom-tag-controller"];

return controller.readCustomTag({ 'id': customTagId }, getClientAuthTokenObj(this.token)).then((response) => {

return response;

});

}

...

Updating the Custom Tags List for an Application Version

To update the custom tags list for the application version:

//updateCustomTagsOfVersion_spec.js

...

it('update the list of custom tags', function (done) {

restClient.updateCustomTagsOfVersion(config.sampleVersionId, customTags).then((response) => {

console.log(chalk.green(`successfully updated the custom tags for the project version with ID ${config.sampleVersionId}`));

done();

}).catch((err) => {

console.log(chalk.red("error updating the list of custom tags for the project version with ID:" + config.sampleVersionId), err)

done(err);

});

});

...

//restClient.js

...

updateCustomTagsOfVersion(versionId, customTagList) {

const controller = this.api["custom-tag-of-project-version-controller"];

return controller.updateCollectionCustomTagOfProjectVersion({ 'parentId': versionId, 'data': customTagList}, getClientAuthTokenObj(this.token)).then((response) => {

return response;

});

}

...

Note that the API ignores any duplicate custom tags in the list.
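Even though the server ignores duplicates, skipping them on the client keeps the request and the logged id list clean. The following sketch appends a tag only if no tag with the same id is already present; `appendCustomTag` is a hypothetical helper and the tag objects are stand-in data.

```javascript
/**
 * Sketch: return a new list with `tag` appended, unless a tag with the same
 * id is already in the list. The input array is not modified.
 */
function appendCustomTag(customTags, tag) {
  if (customTags.some((existing) => existing.id === tag.id)) {
    return customTags.slice();
  }
  return customTags.concat([tag]);
}

// Stand-in data; real objects come from readCustomTag / listCustomTagOfProjectVersion.
let customTags = [{ id: 1, name: "Analysis" }];
customTags = appendCustomTag(customTags, { id: 9, name: "Triage" });
customTags = appendCustomTag(customTags, { id: 9, name: "Triage" });
console.log(customTags.map((t) => t.id).join(", "));
```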

Overview

This test case creates a local SSC user and then assigns it to an existing application version.

Creating the local user

A timestamp is generated and appended to the username, last name, and email address to differentiate the users created by different executions of the test.

//createLocalUserAndAssignToVersion_spec.js

...

const localUser = {

"requirePasswordChange": false,

"userName": "newuser-"+timeStamp,

"firstName": "new",

"lastName": "user" +timeStamp,

"email": "newuser" + +timeStamp + "@test.com",

"clearPassword": "Admin_12",

"passwordNeverExpire": true,

"roles": [{

"id": "securitylead"

},

{

"id": "manager"

},

{

"id": "developer"

}]

};

...

//restClient.js

...

restClient.api["local-user-controller"].createLocalUser({

user: localUser

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

...

Assigning the User

This is similar to the Assign User to Versions scenario.

The localUserEntity is saved from the previous test case.

//createLocalUserAndAssignToVersion_spec.js

...

it('assign user to version', function (done) {

const requestData = {"type":"assign","ids":[config.sampleVersionId]};

if(!localUserEntity) {

return done(new Error("No local user created."));

}

let userId = localUserEntity.id;

restClient.assignUserToVersions(userId,requestData).then((resp) => {

console.log(chalk.green(`successfully assigned user to version ${config.sampleVersionId}`));

done();

...
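The user payload shown above can be produced by a small factory so that every run gets a unique user name and email. In this sketch `buildLocalUser` is hypothetical; the field names and role ids come from the sample, and the password is a placeholder that must satisfy your SSC password policy.

```javascript
/**
 * Sketch: build a unique local user payload for createLocalUser.
 * @param {number|string} timeStamp - e.g. Date.now()
 * @param {string[]} roleIds - SSC role ids to grant
 */
function buildLocalUser(timeStamp, roleIds = ["securitylead", "manager", "developer"]) {
  return {
    requirePasswordChange: false,
    userName: "newuser-" + timeStamp,
    firstName: "new",
    lastName: "user" + timeStamp,
    email: "newuser" + timeStamp + "@test.com",
    clearPassword: "Admin_12", // placeholder password from the sample
    passwordNeverExpire: true,
    roles: roleIds.map((id) => ({ id })),
  };
}

const user = buildLocalUser(Date.now());
console.log(user.userName, user.email);
```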

Overview

Auth entities are a representation of a local user, LDAP user or LDAP group.

This test case gets the auth entities (see below) for a version, assigns new entities to the same version, and then reverts the version to its original state.

A PUT (update) operation on version auth entities replaces the whole list of assigned auth entities; no merging is done.

Get Version Assigned Auth Entities

Get the list of auth entities and save it to originalAuthEntities.

//restClient.js

...

restClient.api["auth-entity-of-project-version-controller"].listAuthEntityOfProjectVersion({parentId: pvId},

getClientAuthTokenObj(restClient.token))

...

//assignAppVersionToAuthEntities_spec.js

...

originalAuthEntities = resp

...

Assign New Auth Entities

Pass a list of auth entities (represents a local user / LDAP user or LDAP group) to the API call.

//config.js

...

sampleAuthEntities: [{"id": 12, "isLdap": false}, {"id": 9, "isLdap": false}, {"id": 6, "isLdap": false}]

...

//restClient.js

...

restClient.api["auth-entity-of-project-version-controller"].updateCollectionAuthEntityOfProjectVersion({

parentId: pvId,

data: arrayOfAuthEntities //== sampleAuthEntities

}, getClientAuthTokenObj(restClient.token)

).then((resp) => {

...

Revert

Re-assign the auth entities saved in the previous test in originalAuthEntities to the same version.

//restClient.js

...

restClient.api["auth-entity-of-project-version-controller"].updateCollectionAuthEntityOfProjectVersion({

parentId: pvId,

data: arrayOfAuthEntities //== originalAuthEntities

}, getClientAuthTokenObj(restClient.token)

).then((resp) => {

...
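Because a PUT replaces the whole list, it helps to know which entities a new assignment will drop and to send back exactly the saved list on revert. The following sketch assumes that entities returned by listAuthEntityOfProjectVersion expose `id` and `isLdap`, like the entries in config.sampleAuthEntities; the helper names, the `name` field, and the data are hypothetical.

```javascript
/**
 * Sketch: reduce saved entities to the {id, isLdap} shape used in config.js.
 */
function toAuthEntityPayload(entities) {
  return entities.map(({ id, isLdap }) => ({ id, isLdap: Boolean(isLdap) }));
}

/**
 * Sketch: ids in `current` that a PUT of `replacement` would remove.
 */
function removedBy(current, replacement) {
  const keep = new Set(replacement.map((e) => e.id));
  return current.filter((e) => !keep.has(e.id)).map((e) => e.id);
}

// Stand-in data; `name` represents any extra response field that gets dropped.
const originalAuthEntities = [{ id: 1, isLdap: false, name: "admin" }];
const sampleAuthEntities = [{ id: 12, isLdap: false }, { id: 9, isLdap: false }];
console.log("dropped by PUT:", removedBy(originalAuthEntities, sampleAuthEntities));
console.log("revert payload:", toAuthEntityPayload(originalAuthEntities));
```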

Overview

Attributes are created in the SSC administration section under Templates -> Attributes.

The script:

To learn more about the batch functionality, refer to the Batch Predict section.

Setup

This script creates an attribute with a random name and then saves its ID for the assignment part.

An easy way to get the list of existing attribute definitions is to send an HTTP GET request to [host:port]/[ssc context]/api/v1/attributeDefinitions.

You can also sniff the HTTP traffic from the UI to get the attribute ID.

The ID from the create step of the script is used in the assignment part with the sampleAttributeValue. For example:

sampleAttributeValue: [{"attributeDefinitionId":29,"values":null,"value":"#sandbox_sample_guid"}]

The following code runs through the CSV file (defined in the config.js file) and, for each version, assigns the value from sampleAttributeValue ("#sandbox_sample_guid" here) to attribute 29.

//restClient.js

/**

* create attribute definition

* @param {*} definition - attribute definition json payload (see config.js)

*/

createAttributeDefinition(definition) {

const restClient = this;

return new Promise((resolve, reject) => {

if (!restClient.api) {

return reject("restClient not initialized! make sure to call initialize before using API");

}

restClient.api["attribute-definition-controller"].createAttributeDefinition({

resource: definition

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

console.log("attribute " + resp.obj.data.id + " created successfully!");

resolve(resp.obj.data); //return attribute definition object

}).catch((err) => {

reject(err);

});

});

}

/**

* Assign an attribute to a version with value

* @param {*} versionId

* @param {*} attributes

*/

assignAttribute(versionId, attributes) {

const restClient = this;

return new Promise((resolve, reject) => {

if (!restClient.api) {

return reject("restClient not initialized! make sure to call initialize before using API");

}

restClient.api["attribute-of-project-version-controller"].updateCollectionAttributeOfProjectVersion({

parentId: versionId,

data: attributes

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

console.log("versionid " + versionId + " updated successfully!");

resolve(versionId);

}).catch((err) => {

reject(err);

});

});

}

//assignAttributeVersions_spec.js - second part of script to assign values.

it('batch assigns a value to an attribute on multiple versions', function (done) {

commonTestsUtils.batchAPIActions(done, "assignAttribute", config.sampleAttributeValue);

});
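For a single-input (text) attribute, the value goes in `value` and `values` stays null, as in config.sampleAttributeValue. The following sketch builds that payload from the id returned by the create step; `textAttributeValue` is a hypothetical helper and `createdDefinition` is stand-in data for `resp.obj.data`.

```javascript
/**
 * Sketch: build the data array for assignAttribute for a text attribute,
 * in the shape of config.sampleAttributeValue.
 */
function textAttributeValue(attributeDefinitionId, text) {
  if (!Number.isInteger(attributeDefinitionId)) {
    throw new Error("attributeDefinitionId must be an integer");
  }
  return [{ attributeDefinitionId, values: null, value: text }];
}

const createdDefinition = { id: 29 }; // stand-in for createAttributeDefinition's result
console.log(JSON.stringify(textAttributeValue(createdDefinition.id, "#sandbox_sample_guid")));
```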

Overview

Application versions can be sent for audit predictions through the Fortify Audit Assistant service. This script allows the sending of multiple batches of versions to the prediction service via SSC.

The script splits the versions into batches and fires the prediction requests of each batch in parallel. Once responses are returned for all versions in a batch, the next batch is sent out.

A sample run would look something like this:

//config.batchSize = 3

//versionIdCSV = 5432, 2123, 10, 653, 232, 123

----10:00:00----

Batch 1

predict 5432 //versionid=5432

predict 2123

predict 10

//all responses come back

----10:01:00----

Batch 2

predict 653

predict 232

predict 123

This document does not go through the code in commonTestUtils.js that handles the batch sending.
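Since that code is not covered, here is an independent sketch of the batching behavior described above; it is not the code in commonTestUtils.js. `chunk` and `runInBatches` are hypothetical names, and `action` stands in for a per-version call such as `restClient.sendToAA(id, actionType)`. It uses plain promise chaining rather than async/await.

```javascript
/**
 * Split items into consecutive batches of at most `size` elements.
 */
function chunk(items, size) {
  const batches = [];
  for (let i = 0; i < items.length; i += size) {
    batches.push(items.slice(i, i + size));
  }
  return batches;
}

/**
 * Fire each batch in parallel; start the next batch only after every request
 * in the current batch has resolved. The first rejection stops the run
 * (other requests in that batch may still be in flight).
 * @param {number[]} ids - version ids
 * @param {number} batchSize - e.g. config.batchSize
 * @param {(id: number) => Promise<any>} action - per-version call
 */
function runInBatches(ids, batchSize, action) {
  const results = [];
  return chunk(ids, batchSize)
    .reduce(
      (previous, batch) => previous
        .then(() => Promise.all(batch.map((id) => action(id))))
        .then((batchResults) => { results.push(...batchResults); }),
      Promise.resolve()
    )
    .then(() => results);
}
```

With config.batchSize = 3 and the six ids from the sample run, `chunk` yields two batches of three, matching the timeline above.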

Prediction Action

The sendToAA function triggers the action through doActionProjectVersion on the project-version-controller, passing the version id and the action type for prediction. If the returned message contains "failed", the promise is rejected (legacy behavior).

//restClient.js

/**

* send for prediction or training or future actions when implemented

* @param {*} versionId

* @param {*} actionType

*/

sendToAA(versionId, actionType) {

const restClient = this;

return new Promise((resolve, reject) => {

if (!restClient.api) {

return reject("restClient not initialized! Make sure to call initialize before using API");

}

/*

call the action to predict - @type can be "sendForPrediction" or "sendForTraining"

*/

restClient.api["project-version-controller"].doActionProjectVersion({

"id": versionId, resourceAction: { "type": actionType }

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

if (resp.obj.data.message.indexOf("failed") !== -1) {

//legacy

reject(new Error(resp.obj.data.message));

} else {

resolve(resp);

}

}).catch((error) => {

reject(error);

});

});

}

Overview

Issues can be retrieved from an application version via the issue-of-project-version-controller.

This section shows a simple retrieval of issues with a filter and a way to get all issues from a version in batches.

Get Version Issues in batches

Application versions may have a large number of issues, so for performance reasons it is recommended to retrieve them in batches. The following code shows the calling code with the callback that is called for each batch of 20 issues. The promise is resolved when all batches are done.

This sample code can serve as an entry point for business logic that works on all or a subset of a version’s issues.

//issues_spec.js

it('get all issues with batching', function (done) {

//3rd parameter is the callback for each batch

restClient.getAllIssueOfVersion(config.sampleVersionId, 20, (issues) => {

console.log("next batch received: \n");

console.log("-----------------------");

console.log(issues.map(elem => elem.primaryLocation).join(", "));

console.log("-----------------------");

}).then((allCount) => {

//ALL DONE

console.log(chalk.green("successfully got a total of = " + allCount + " issues"));

done();

}).catch((err) => {

console.log(chalk.red("error listing issues"), err)

done(err);

});

});
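The body of getAllIssueOfVersion is not shown here. The following is a minimal sketch of the same pattern (fetch a page, hand it to a per-batch callback, resolve with the total count). It assumes a hypothetical `fetchPage(start, limit)` wrapper around the issue list call that resolves `{ data, count }`, where `count` is the total number of matching issues; verify the actual response fields against your SSC version.

```javascript
/**
 * Sketch: page through a list, calling onBatch for each non-empty page,
 * and resolve with the number of items received.
 * @param {(start: number, limit: number) => Promise<{data: any[], count: number}>} fetchPage
 * @param {number} limit - page size, e.g. 20
 * @param {(items: any[]) => void} onBatch - called once per page
 */
function fetchAllInPages(fetchPage, limit, onBatch) {
  function fetchFrom(start, received) {
    return fetchPage(start, limit).then(({ data, count }) => {
      if (data.length > 0) {
        onBatch(data);
      }
      const total = received + data.length;
      // Stop on a short page or once the reported total is reached.
      if (data.length < limit || total >= count) {
        return total;
      }
      return fetchFrom(start + limit, total);
    });
  }
  return fetchFrom(0, 0);
}
```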

Filter issues using Fortify Search Syntax

The code below shows how to filter the issues of a version by a custom tag with GUID 87f2364f-dcd4-49e6-861d-f8d3f351686b (4th value, exploitable) AND by the category SQL Injection.

11111111-1111-1111-1111-111111111165 is the GUID of the Category attribute of an issue.

To get the metadata that includes these GUIDs, you can use the issue-selector-set-of-project-version-controller controller.

The filter parameter has the format:

filter=TYPE[typeid]:val,TYPE2[typeid]:val

//issues_spec.js

it('should filter issues ', function (done) {

restClient.getIssues(config.sampleVersionId, 0, "CUSTOMTAG[87f2364f-dcd4-49e6-861d-f8d3f351686b]:4,ISSUE[11111111-1111-1111-1111-111111111165]:SQL Injection").then((response) => {

console.log('filter found:' + (response.obj.data ? response.obj.data.length : 0) + ' issues');

done();

}).catch((err) => {

console.log(chalk.red("error filtering issues"), err)

done(err);

});

});
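When filters are assembled from metadata rather than hard-coded, a small builder keeps the `TYPE[typeid]:value` format consistent. `buildIssueFilter` is a hypothetical helper; the GUIDs below are the ones from the example above.

```javascript
/**
 * Sketch: assemble a filter string in the TYPE[typeid]:value,... format.
 * Values are inserted verbatim; a value containing a comma would collide
 * with the condition separator, and no escaping is attempted here.
 */
function buildIssueFilter(conditions) {
  return conditions
    .map(({ type, id, value }) => `${type}[${id}]:${value}`)
    .join(",");
}

const filter = buildIssueFilter([
  { type: "CUSTOMTAG", id: "87f2364f-dcd4-49e6-861d-f8d3f351686b", value: 4 },
  { type: "ISSUE", id: "11111111-1111-1111-1111-111111111165", value: "SQL Injection" },
]);
console.log(filter);
```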

Overview

Application versions can be sent for training to the Fortify Audit Assistant service. This script allows the sending of multiple batches of versions to the training service through SSC.

The script splits the versions into batches and fires the training requests of each batch in parallel. Once all responses for a batch are returned, the next batch is sent out.

A sample run would look something like this:

//config.batchSize = 3

//versionIdCSV = 5432, 2123, 10, 653, 232, 123

----10:00:00----

Batch 1

train 5432 //versionid=5432

train 2123

train 10

//all responses come back

----10:01:00----

Batch 2

train 653

train 232

train 123

This document does not cover the code in commonTestUtils.js that handles the batch sending.

Training Action

The sendToAA function triggers the action through doActionProjectVersion on the project-version-controller, passing the version id and the action type for training. If the returned message contains "failed", the promise is rejected (legacy behavior).

//restClient.js

/**

* send for prediction or training or future actions when implemented

* @param {*} versionId

* @param {*} actionType

*/

sendToAA(versionId, actionType) {

const restClient = this;

return new Promise((resolve, reject) => {

if (!restClient.api) {

return reject("restClient not initialized! Make sure to call initialize before using API");

}

/*

call the action to train - @type can be "sendForPrediction" or "sendForTraining"

*/

restClient.api["project-version-controller"].doActionProjectVersion({

"id": versionId, resourceAction: { "type": actionType }

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

if (resp.obj.data.message.indexOf("failed") !== -1) {

//legacy

reject(new Error(resp.obj.data.message));

} else {

resolve(resp);

}

}).catch((error) => {

reject(error);

});

});

}

Overview

Generate an authentication token based on type. Default is to generate a UnifiedLoginToken.

You can set the FORTIFY_TOKEN_TYPE environment variable before running the script to generate the different token types, such as: AnalysisDownloadToken, AnalysisUploadToken, AuditToken, UploadFileTransferToken, DownloadFileTransferToken, ReportFileTransferToken, CloudCtrlToken, CloudOneTimeJobToken, WIESystemToken, WIEUserToken, UnifiedLoginToken, ReportToken, PurgeProjectVersionToken

Generating a token

Use the auth-token-controller to generate the token, authenticating with basic HTTP auth.

generateToken(type) {

const restClient = this;

return new Promise((resolve, reject) => {

//basic http auth credentials are passed via clientAuthorizations below

restClient.api["auth-token-controller"].createAuthToken({ authToken: { "terminalDate": getExpirationDateString(), "type": type } }, {

responseContentType: 'application/json',

clientAuthorizations: {

"Basic": new Swagger.PasswordAuthorization(config.user, config.password)

}

}).then((data) => {

//got it so pass along

resolve(data.obj.data.token)

}).catch((error) => {

reject(error);

});

});

}
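Two Authorization headers are involved in this flow: Basic auth for the createAuthToken call, then the generated token for every later call. The sketch below builds both. It assumes SSC expects tokens in the form `FortifyToken <token>`; getClientAuthTokenObj presumably wraps that detail in the samples, but its body is not shown here. `basicAuthHeader` and `fortifyTokenHeader` are hypothetical helpers.

```javascript
/**
 * Sketch: Basic auth header for the token request.
 * Buffer.from is the supported replacement for the deprecated new Buffer(...).
 */
function basicAuthHeader(user, password) {
  return "Basic " + Buffer.from(user + ":" + password, "utf8").toString("base64");
}

/**
 * Sketch: header for subsequent calls. Assumption: SSC uses the
 * "FortifyToken" scheme for generated tokens.
 */
function fortifyTokenHeader(token) {
  return "FortifyToken " + token;
}

console.log(basicAuthHeader("user", "pass")); // Basic dXNlcjpwYXNz
```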

Overview

Downloading an FPR file is not currently part of the SSC Swagger spec and, therefore, is not generated in the code API layer.

This tutorial is based on the static documentation you can find in your SSC instance when navigating to [SSC URL]/

Getting a file download token

File downloads in SSC require a single-use auth token. The steps to download an FPR are:

There are two ways to get the token: the legacy fileTokens endpoint described in the static docs, or Swagger.

We will use Swagger.

See generateToken.

/**

* get the single use download token

*/

function getFileToken(callback) {

restClient.generateToken("DownloadFileTransferToken").then((token) => {

callback(null, token);

}).catch((error) => {

callback(error);

});

},

Download the file

As mentioned previously, this API is not currently part of the Swagger spec. The URL is built, and then the response stream is piped to a file to save the download from that endpoint.

//restClient.js

/**

* use a simple file download pipe from response to save to downloads folder

*/

function downloadFPR(token, callback) {

const url = config.sscFprDownloadURL + "?mat=" + token + "&id=" + artifactId;

try {

let destFolder = __dirname + "/.." + config.downloadFolder;

createDirectoryIfNoExist(destFolder);

downloadFile(url, destFolder, filename, (err) => {

if (err) {

callback(err);

} else {

callback(null, destFolder + "/" + filename);

}

});

} catch (e) {

callback(e);

}

}
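downloadFPR concatenates the token into the query string without encoding it. Tokens can contain characters such as "+", "/" or "=", so the following sketch builds the same `mat` query parameter with Node's built-in `URL` and `URLSearchParams`, which percent-encode values. `buildTransferUrl` is a hypothetical helper and the base URL is a placeholder for config.sscFprDownloadURL.

```javascript
/**
 * Sketch: build a file transfer URL with the single-use token in "mat"
 * plus any extra query parameters, all properly encoded.
 */
function buildTransferUrl(baseUrl, token, params) {
  const url = new URL(baseUrl);
  url.searchParams.set("mat", token);
  Object.keys(params).forEach((key) => url.searchParams.set(key, String(params[key])));
  return url.toString();
}

console.log(buildTransferUrl("http://localhost:8080/ssc/download/fpr", "abc+/=", { id: 7 }));
```

The same helper would apply to the upload URL, with `{ entityId: versionId }` as the extra parameter.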

Overview

Uploading an FPR is not currently part of the SSC Swagger spec and, therefore, is not generated in the code API layer.

This tutorial is based on the static documentation you can find in your SSC instance when navigating to [SSC URL]/

Getting a file upload token

File uploads in SSC require a single-use auth token. The steps to upload an FPR are:

There are two ways to get the token: the legacy fileTokens endpoint described in the static docs, or Swagger.

We will use Swagger.

See generateToken.

/**

* get the single-use upload token

*/

function getFileToken(callback) {

restClient.generateToken("UploadFileTransferToken").then((token) => {

callback(null, token);

}).catch((error) => {

callback(error);

});

},

Uploading the file

As mentioned previously, this is not an API that is part of the Swagger spec at the moment.

This sample uses the Node request library to perform the multipart POST request.

//restClient.js

function uploadFile(token, callback) {

//Upload the file. For more information on this in SSC, navigate to [SSC URL]/<app context>/html/docs/docs.html#/fileupdownload

const url = config.sscFprUploadURL + "?mat=" + token + "&entityId=" + versionId;

try {

const formData = {

files: [

fs.createReadStream(__dirname + "/../" + fprPath)

]

};

request.post({ url: url, formData: formData }, function optionalCallback(err, httpResponse, body) {

if (err) {

callback(err);

} else {

if (body && body.toLowerCase().indexOf(':code>-10001') !== -1) {

callback(null, body);

} else {

callback(new Error("error uploading FPR: " + body))

}

}

});

} catch (e) {

callback(e);

}

}
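uploadFile treats a body containing `:code>-10001` as success. The following sketch turns that check into reusable helpers that also expose the numeric code for logging. It assumes the body is XML containing one namespaced `code` element; `parseUploadCode` and `isUploadSuccess` are hypothetical names, and the sketch does not interpret codes other than -10001.

```javascript
// Success code used by the sample's upload check.
const UPLOAD_SUCCESS_CODE = -10001;

/**
 * Sketch: extract the numeric code from an upload response body,
 * or null if none is found.
 */
function parseUploadCode(body) {
  const match = /:code>\s*(-?\d+)\s*</i.exec(body || "");
  return match ? Number(match[1]) : null;
}

function isUploadSuccess(body) {
  return parseUploadCode(body) === UPLOAD_SUCCESS_CODE;
}
```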

Tracking the status

To track the processing status of the artifact in SSC we will query the job-controller endpoint.

For this example we will just print messages in the different processing phases.

//fprUpload_spec.js

/**

* Poll the uploaded FPR in previous test for processing status

*/

it('poll for status ', function (done) {

if (!artifactJobid) {

return done(new Error("previous upload FPR failed"));

}

/*

* callback for setTimeout. Will use restClient to get the fpr status and then based on returned status either set another timer or stop execution.

*/

function checkStatus() {

const timeoutSec = 2;

restClient.getJob(artifactJobid).then((job) => {

jobEntity = job; //update for next test

let msg;

switch (jobEntity.state) {

case "FAILED":

msg = `${artifactJobid} failed! project version id: ${jobEntity.projectVersionId} artifact id: ${jobEntity.jobData.PARAM_ARTIFACT_ID}`;

console.log(chalk.red(msg));

//a failed job will not recover, so stop polling and fail the test

done(new Error(msg));

break;

case "CANCELLED":

msg = `${artifactJobid} canceled! project version id: ${jobEntity.projectVersionId} artifact id: ${jobEntity.jobData.PARAM_ARTIFACT_ID}`;

console.log(chalk.red(msg));

done(new Error(msg));

break;

case "RUNNING":

case "WAITING_FOR_WORKER":

case "PREPARED":

console.log(`${artifactJobid} ongoing!`);

setTimeout(checkStatus, timeoutSec * 1000);

break;

case "FINISHED":

console.log(chalk.green(`${artifactJobid} completed successfully, project version id: ${jobEntity.projectVersionId} artifact id: ${jobEntity.jobData.PARAM_ARTIFACT_ID}`));

done();

break;

}

}).catch((err) => {

let msg = `${artifactJobid} status check failed! ` + err.message;

console.log(chalk.red(msg));

done(err);

});

}

checkStatus();

});

});
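The switch above mixes two concerns: deciding what a job state means and acting on it. A sketch that moves the decision into a pure function makes the terminal states explicit and gives states the sample does not list a defined outcome. The state names are the ones the sample handles; treating any other state as still in progress is an assumption, and nextJobAction is a hypothetical name.

```javascript
// Maps the SSC job states handled in the sample to the poller's next action.
const JOB_STATE_ACTIONS = new Map([
  ["PREPARED", "wait"],
  ["WAITING_FOR_WORKER", "wait"],
  ["RUNNING", "wait"],
  ["FINISHED", "succeed"],
  ["FAILED", "fail"],
  ["CANCELLED", "fail"],
]);

// Returns "wait", "succeed" or "fail". States not in the table keep
// polling (an assumption; a caller may prefer to fail fast instead).
function nextJobAction(state) {
  return JOB_STATE_ACTIONS.get(state) ?? "wait";
}
```

Inside checkStatus, "wait" would schedule another setTimeout, "succeed" would call done(), and "fail" would call done(new Error(msg)).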

Overview

This document shows how to do the following:

Schedule a report for processing

In this tutorial, generating a report consists of two steps.

The code can be generalized further to support other report types, but for the sake of simplicity we pass some parameters specific to “DISA STIG”, for example "type": "ISSUE".

Getting the report definition object

In the following code, we use the “q” parameter supported by many API calls in SSC. This parameter narrows down the results based on the property values of the report definition. Notice q: "name:" + stigDefName; it is eventually translated to

http://[ssc]/api/v1/reportDefinitions?q=name:"DISA STIG"

//restClient.js

/**

* Query the server for the definition id based on the name of the report definition uploaded to SSC (the default is usually "DISA STIG")

*/

function getDefinition(callback) {

restClient.api["report-definition-controller"].listReportDefinition({ q: "name:" + stigDefName },

getClientAuthTokenObj(restClient.token)).then((result) => {

if (result.obj.data && Array.isArray(result.obj.data) && result.obj.data.length === 1) {

callback(null, result.obj.data[0]);

} else {

callback(new Error("Could not find " + stigDefName + " report definition on the server, look in the report definition in the admin section"));

}

}).catch((err) => {

callback(err);

});

},
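The translation from the q argument to the query string can be reproduced with the WHATWG URL API. A sketch, assuming an API base URL of the form [ssc]/api/v1 (the host below is a placeholder) and quoting the name as the URL above does; reportDefinitionQueryUrl is a hypothetical helper, not part of the generated client.

```javascript
// Builds the report-definition query URL with a `q` filter on the name.
// URLSearchParams percent-encodes the colon, quotes and space for us.
function reportDefinitionQueryUrl(apiBase, definitionName) {
  const base = apiBase.endsWith("/") ? apiBase : apiBase + "/";
  const url = new URL("reportDefinitions", base);
  url.searchParams.set("q", `name:"${definitionName}"`);
  return url.toString();
}
```

For example, reportDefinitionQueryUrl("http://ssc.example.com/ssc/api/v1", "DISA STIG") returns http://ssc.example.com/ssc/api/v1/reportDefinitions?q=name%3A%22DISA+STIG%22, which the server decodes back to name:"DISA STIG".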

Report definition

The report definition object is a generic description of the parameters that a specific report accepts. For example, DISA STIG accepts a parameter of type SINGLE_SELECT_DEFAULT with the identifier “ExternalListGUID”, whose options include DISA STIG 4.3, DISA STIG 4.2, and others.

....

"name": "DISA STIG",

"guid": "DISA_STIG",

"id": 13,

"parameters": [

{

"id": 40,

"name": "Options",

"type": "SINGLE_SELECT_DEFAULT",

"description": "DISA STIG Options",

"identifier": "ExternalListGUID",

"reportDefinitionId": 13,

"paramOrder": 0,

"reportParameterOptions": [

{

"id": 5,

"displayValue": "DISA STIG 4.3",

"reportValue": "A0B313F0-29BD-430B-9E34-6D10F1178506",

"defaultValue": true,

"description": "DISA STIG 4.3",

"order": 0

},

{

"id": 6,

"displayValue": "DISA STIG 4.2",

...

Creating the basic request data

The first step is to populate some basic parameters:

//restClient.js

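const requestData = {

"name": name, "note": notes, "type": "ISSUE", "reportDefinitionId": definition.id, "format": format,

//versionId is the application version the report runs against (the same value used for SINGLE_PROJECT below)

"project": { "id": projectId, "version": { "id": versionId } }, "inputReportParameters": []

};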

Filling in parameter values

Iterate over the parameters in the definition and map each one to a parameter, with a selected value, to be sent to the server.

Other report definitions may have other parameter types.

//restClient.js

//go through report definition parameters and fill in some data

requestData.inputReportParameters = definition.parameters.map((paramDefinition) => {

const param = {

name: paramDefinition.name,

identifier: paramDefinition.identifier,

type: paramDefinition.type

};

switch (paramDefinition.type) {

case "SINGLE_SELECT_DEFAULT":

//find the report param option that has order 0 (most recent)

param.paramValue = paramDefinition.reportParameterOptions.find(option => option.order === 0).reportValue;

break;

case "SINGLE_PROJECT":

param.paramValue = versionId;

break;

case "BOOLEAN":

//send "false" for all values for the sake of example and speed of processing.

//this could be turned into another switch case based on parameter identifier

//such as SecurityIssueDetails or IncludeSectionDescriptionOfKeyTerminology

param.paramValue = false;

break;

}

return param;

});
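The expression find(option => option.order === 0).reportValue throws a TypeError when no option has order 0. A more defensive sketch (pickDefaultOptionValue is a hypothetical helper, not part of the sample) prefers the option flagged defaultValue, which the DISA STIG 4.3 entry in the definition excerpt carries, falls back to order 0, and fails with a readable message otherwise.

```javascript
// Chooses the reportValue for a SINGLE_SELECT_DEFAULT parameter definition.
function pickDefaultOptionValue(paramDefinition) {
  const options = paramDefinition.reportParameterOptions || [];
  const chosen =
    options.find((option) => option.defaultValue === true) ||
    options.find((option) => option.order === 0);
  if (!chosen) {
    throw new Error(`no default option for parameter ${paramDefinition.identifier}`);
  }
  return chosen.reportValue;
}
```

In the SINGLE_SELECT_DEFAULT case, param.paramValue = pickDefaultOptionValue(paramDefinition) would replace the find(...) call.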

Create report on server (Schedule for processing)

//restClient.js

restClient.api["saved-report-controller"].createSavedReport({

resource: requestData

}, getClientAuthTokenObj(restClient.token)).then((resp) => {

callback(null, resp.obj.data);

}).catch((error) => {

callback(error);

});

Track report status

The following example shows how to track the processing status of this report on the server. Report generation is asynchronous, so its status has to be polled until processing completes or fails.

//generateReport_spec.js

/**

* Poll the report created in previous test for processing status

*/

it('poll for status ', function (done) {

if (!savedReportEntity) {

return done(new Error("previous report generation failed"));

}

/*

* callback for setTimeout. Will use restClient to get the report status and then, based on returned status, either set another timer or stop execution.

*/

function checkStatus() {

const timeoutSec = 2;

restClient.getSavedReportEntity(savedReportEntity.id).then((savedReport) => {

savedReportEntity = savedReport; //update for next test

switch (savedReport.status) {

case "PROCESSING":

console.log(`${savedReport.name} id(${savedReport.id}) still processing! will check again in ${timeoutSec} seconds`);

setTimeout(checkStatus, timeoutSec*1000);

break;

case "ERROR_PROCESSING":

let msg = `${savedReport.name} id(${savedReport.id}) failed!`;

console.log(chalk.red(msg));

done(new Error(msg));

break;

case "SCHED_PROCESSING":

console.log(`${savedReport.name} id(${savedReport.id}) still scheduled! will check again in ${timeoutSec} seconds`);

setTimeout(checkStatus, timeoutSec*1000);

break;

case "PROCESS_COMPLETE":

console.log(chalk.green(`${savedReport.name} id(${savedReport.id}) completed successfully`));

done();

break;

}

}).catch((err) => {

let msg = `${savedReportEntity.name} id(${savedReportEntity.id}) failed! ` + err.message;

console.log(chalk.red(msg));

done(err);

});

}

checkStatus();

});
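Outside of a mocha test, the same polling loop can be written with async/await. A sketch, assuming getSavedReport is any async function that resolves with an object carrying a status field (for example restClient.getSavedReportEntity); the maxAttempts cap and the choice to reject on a status the sample does not list are additions, not part of the sample.

```javascript
const { setTimeout: sleep } = require("timers/promises");

// Polls getSavedReport(id) until the report leaves the processing states
// handled in the sample; resolves with the final report entity.
async function waitForReport(getSavedReport, id, { intervalMs = 2000, maxAttempts = 150 } = {}) {
  for (let attempt = 1; attempt <= maxAttempts; attempt++) {
    const report = await getSavedReport(id);
    switch (report.status) {
      case "PROCESS_COMPLETE":
        return report;
      case "ERROR_PROCESSING":
        throw new Error(`report ${id} failed`);
      case "SCHED_PROCESSING":
      case "PROCESSING":
        break; // still queued or running: poll again
      default:
        throw new Error(`report ${id} has unexpected status ${report.status}`);
    }
    if (attempt < maxAttempts) await sleep(intervalMs);
  }
  throw new Error(`report ${id} not finished after ${maxAttempts} attempts`);
}
```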

Download report

Downloading the report simply pipes the HTTP response stream to a file. As with the FPR upload call, the download URL is not part of the Swagger-generated REST API.

The async.waterfall sequence starts with generating a one-time file download token.

//restClient.js

...

downloadReport(reportId, filename) {

const restClient = this;

return new Promise((resolve, reject) => {

async.waterfall([

/**

* get the single-use download token

*/

function getFileToken(callback) {

restClient.generateToken("ReportFileTransferToken").then((token) => {

callback(null, token);

}).catch((error) => {

callback(error);

});

},

The second part of the sequence downloads the file. As mentioned previously, file download in SSC is not part of the Swagger code generation, so the code builds the download URL itself and then pipes the response stream from that endpoint to a file.

//restClient.js

/**

* use a simple file download pipe from response to save to downloads folder

*/

function downloadReport(token, callback) {

//download the file - for more info on this in SSC navigate to [SSC URL]//html/docs/docs.html#/fileupdownload

const url = config.sscReportDownloadURL + "?mat=" + token + "&id=" + reportId;

try {

const destFolder = __dirname + "/.." + config.downloadFolder;

downloadFile(url, destFolder, filename, (err) => {

if (err) {

callback(err);

} else callback(null, destFolder + "/" + filename);

});

} catch (e) {

callback(e);

}

}

], function onDoneDownload(err, dest) {

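Both download samples call a downloadFile(url, destFolder, filename, callback) helper whose body is not shown. A minimal sketch using only Node's built-in http, https, fs and stream modules, assuming the helper should create the destination folder and treat any status other than 200 as an error; this is not necessarily how the sample implements it.

```javascript
const http = require("http");
const https = require("https");
const fs = require("fs");
const path = require("path");
const { pipeline } = require("stream");

// Hypothetical downloadFile: streams the HTTP response body into
// destFolder/filename and calls callback(err) or callback(null, destPath).
function downloadFile(url, destFolder, filename, callback) {
  const client = new URL(url).protocol === "https:" ? https : http;
  fs.mkdirSync(destFolder, { recursive: true });
  const dest = path.join(destFolder, filename);
  client
    .get(url, (res) => {
      if (res.statusCode !== 200) {
        res.resume(); // drain the body so the socket is released
        callback(new Error(`download failed with HTTP ${res.statusCode}`));
        return;
      }
      // pipeline closes both streams and reports the first error, if any
      pipeline(res, fs.createWriteStream(dest), (err) => {
        if (err) callback(err);
        else callback(null, dest);
      });
    })
    .on("error", callback);
}
```

fs.mkdirSync can throw synchronously, which is why the callers in the sample wrap the call in try/catch.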

Overview

This section shows how to print controller names and the API calls they include.

Using Swagger code generation

The code below uses the generated Swagger API layer to extract controller names and their available API calls.

//restClient.js

/**

* returns an array of {name: "controller name", apis: ["apifunc1", "apifunc2"...]}

*/

getControllers() {

const restClient = this;

//get list of controllers (the object also has other JavaScript properties, so filter out anything that is not an object)

const controllersNames = Object.keys(restClient.api).filter((item) => {

...

})

//attach to each controller its list of functions

const result = controllersNames.map((name)=>{

...

})

return result;

}
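The filter and map bodies are elided above. As an illustration only, assuming restClient.api is a plain object whose controller entries are objects holding the API functions (the mock below is hypothetical, not SSC's actual client object), the two steps could look like this:

```javascript
// Lists each object-valued property of `api` as a controller, together
// with the names of that controller's function-valued properties.
function listControllers(api) {
  return Object.keys(api)
    .filter((name) => api[name] !== null && typeof api[name] === "object")
    .map((name) => ({
      name,
      apis: Object.keys(api[name]).filter((fn) => typeof api[name][fn] === "function"),
    }));
}
```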

Printing Controllers

//apiInfo_spec.js

/* getControllers() returns an array of objects similar to this one:

* {"name":"application-state-controller","apis":["getApplicationState","putApplicationState"]}

*/

const controllers = restClient.getControllers();

...

Output

...

parser-plugin-image-controller, getParserPluginImage

local-user-controller, multiDeleteLocalUser,listLocalUser,createLocalUser,deleteLocalUser,readLocalUser,updateLocalUser

ldap-server-controller, multiDeleteLdapServer,listLdapServer,createLdapServer,doCollectionActionLdapServer,deleteLdapServer,readLdapServer,updateLdapServer

ldap-object-controller, multiDeleteLdapObject,listLdapObject,createLdapObject,doCollectionActionLdapObject,deleteLdapObject,readLdapObject,updateLdapObject

job-priority-change-category-warning-of-job-controller, listJobPriorityChangeCategoryWarningOfJob

job-controller, listJob,readJob,updateJob,doActionJob

issue-view-template-controller, listIssueViewTemplate,readIssueViewTemplate

issue-template-controller, multiDeleteIssueTemplate,listIssueTemplate,deleteIssueTemplate,readIssueTemplate,updateIssueTemplate

issue-summary-of-project-version-controller, listIssueSummaryOfProjectVersion

...
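The printing code is elided, but the output shows its format: the controller name, a comma and a space, then the API names joined by commas. A hypothetical sketch that reproduces that format from the objects getControllers() returns (formatControllerLine and printControllers are illustrative names):

```javascript
// Formats one controller entry as "<controller>, <api1>,<api2>,..."
function formatControllerLine(controller) {
  return `${controller.name}, ${controller.apis.join(",")}`;
}

// Prints every controller; `log` defaults to console.log.
function printControllers(controllers, log = console.log) {
  controllers.forEach((controller) => log(formatControllerLine(controller)));
}
```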

Create Version

config.js: sscAPIBase, username, password, sampleVersionId (copy state)

Assign User to Versions

config.js: sscAPIBase, username, password, sampleVersionId, sampleSecondaryVersionId

Update Custom Tags of Version

config.js: sscAPIBase, username, password, sampleVersionId, sampleCustomTagId

Create Local User And Assign

config.js: sscAPIBase, username, password, sampleVersionId

Assign Multiple Users

config.js: sscAPIBase, username, password, sampleAuthEntities, sampleSecondaryVersionId

Batch Attribute

config.js: sscAPIBase, username, password, versionIdCSV, batchSize, sampleAttributeValue

Generate a token

config.js: sscAPIBase, username, password, FORTIFY_TOKEN_TYPE (env var)

Download FPR

config.js: sscAPIBase, username, password, sscUploadURL, sampleFPR, sampleFPRVersionId

Upload FPR & Track Processing

config.js: sscAPIBase, username, password, sscUploadURL, sampleFPR, sampleFPRVersionId

Report: Generate, Track & Download

config.js: sscAPIBase, username, password, downloadFolder

title: “Authentication” date: 2017-08-03 11:03:15 -0700 —

Authenticating with SSC

The Swagger spec enforces security schemes for every API call. The call order needed to successfully authenticate with SSC is as follows:

The initialize() function in restClient.js generates the UnifiedLoginToken, and clearTokensOfUser() cleans up all of the test user's tokens at the end of the run.

If you are not familiar with the async library, see its documentation. The waterfall function runs a set of asynchronous functions in sequence: each function receives the results of the previous one, and calling its callback method tells the next function to start.
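To make the waterfall semantics concrete, here is a deliberately minimal re-implementation for illustration only. It is not the async library's code and skips details the library handles (for example guarding against a callback being called twice); use async.waterfall in practice.

```javascript
// Runs tasks in order. Each task receives the previous task's results plus
// a `next(err, ...results)` callback; the first error skips straight to done.
function waterfall(tasks, done) {
  let index = 0;
  const next = (err, ...results) => {
    if (err) return done(err);
    if (index === tasks.length) return done(null, ...results);
    const task = tasks[index++];
    task(...results, next);
  };
  next(null);
}
```

With the authentication steps in mind, the first task would obtain the token and call next(null, token), the heartbeat task would receive that token and pass it on, and the final handler would receive (err, token).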

Getting the token

api is a reference to the Swagger-generated client object.

We call createAuthToken with the required parameters. In the Promise's then() callback, we take the token from the resolved value and pass it on to the next waterfall handler.

The first parameter in the callback is reserved for error objects.

//restClient.js

...

async.waterfall([

//get the token from SSC using basic http auth

generateToken(type) {

const restClient = this;

return new Promise((resolve, reject) => {

const auth = 'Basic ' + Buffer.from(config.user + ':' + config.password).toString('base64');

/* avoid creating a token again if you already have one.

* In general, for automations either use a short-lived (<1day) token such as "UnifiedLoginToken"

* or preferably, use a long-lived token such as "AnalysisUploadToken"/"JenkinsToken" (lifetime is several months)

* and retrieve it from persistent storage. DO NOT create new long-lived tokens for every run!! */

restClient.api["auth-token-controller"].createAuthToken({ authToken: { "terminalDate": getExpirationDateString(), "type": type } }, {

responseContentType: 'application/json',

clientAuthorizations: {

"Basic": new Swagger.PasswordAuthorization(config.user, config.password)

}

}).then((data) => {

//got it so pass along

resolve(data.obj.data.token)

}).catch((error) => {

reject(error);

});

});

}

...
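Two pieces of generateToken lend themselves to small standalone helpers. basicAuthHeader shows the Basic credentials encoding with Buffer.from, which replaces the deprecated new Buffer() constructor. getExpirationDateString is called above but not shown; the version below is a hypothetical stand-in that returns an ISO-8601 timestamp half a day ahead, and the exact terminalDate format SSC expects is an assumption to check against the auth-token model in your instance.

```javascript
// Builds the HTTP Basic Authorization header value from user credentials.
function basicAuthHeader(user, password) {
  return "Basic " + Buffer.from(`${user}:${password}`, "utf8").toString("base64");
}

// Hypothetical stand-in for getExpirationDateString(): `days` from `now`
// as an ISO-8601 string (assumed format for terminalDate).
function getExpirationDateString(days = 0.5, now = new Date()) {
  const msPerDay = 24 * 60 * 60 * 1000;
  return new Date(now.getTime() + days * msPerDay).toISOString();
}
```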

Using auth to test API

Now that we have the token, we use it to call an SSC API that is useful for testing connectivity and correctness.

The sample calls listFeature on the feature-controller. The handler only checks for an error and passes the token along.

//restClient.js

...

},

function heartbeat(token, callback) {

//We have token, so try it out.

//SSC has a "ping" endpoint called features that is very quick (no db or processing)

//getClientAuthTokenObj (a helper in restClient.js, not shown here) returns an object that instructs Swagger to create a token header

//That is, it ends up sending "Authorization: FortifyToken <token from previous function>"

api["feature-controller"].listFeature({}, getClientAuthTokenObj(token)).then((features) => {

callback(null, token);

}).catch((error) => {

callback(error);

});

}

...
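The effect of getClientAuthTokenObj is an Authorization: FortifyToken <token> header on each request. As a sketch of the same heartbeat without the Swagger client, assuming the features resource is reachable at <API base>/features (the path and the helper names below are assumptions), Node's built-in http and https modules are enough:

```javascript
const http = require("http");
const https = require("https");

// Header the FortifyToken scheme expects, per the comments above.
function fortifyAuthHeaders(token) {
  return { Authorization: `FortifyToken ${token}` };
}

// Resolves with the token when GET <apiBase>/features returns HTTP 200.
function heartbeat(apiBase, token) {
  const url = new URL("features", apiBase.endsWith("/") ? apiBase : apiBase + "/");
  const client = url.protocol === "https:" ? https : http;
  return new Promise((resolve, reject) => {
    const req = client.get(url, { headers: fortifyAuthHeaders(token) }, (res) => {
      res.resume(); // discard the body; only the status matters here
      if (res.statusCode === 200) resolve(token);
      else reject(new Error(`heartbeat failed with HTTP ${res.statusCode}`));
    });
    req.on("error", reject);
  });
}
```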

Waterfall done

Once the waterfall of async functions is done, we reach the final handler. It resolves the Promise returned by initialize() with "success", or rejects it with the first error, so callers can use then() and catch().

], function (err, token) {

//This is the global waterfall handler called after all successful functions executed or first error

if (err) {

reject(err);

} else {

that.token = token; //save token

resolve("success");

}

});

}).catch((err) => {

reject(err);

});

})